Meetup on Machine Learning / Speech Technologies

On March 20, 2018, we once again invited guests via our Meetup page “innovationnow” to our premises, this time for an evening on “Machine Learning / Speech Technologies.” About 40 guests attended the two presentations on the technologies behind Alexa, Siri, and co. As at every one of our meetups, Stellwerk18 provided generous catering.

The Presentations of the Evening:

Conversational Interfaces (Anton Spoeck):

In the first presentation, Toni from our team explained the differences between chatbots and frame-based dialog systems such as Dialogflow (Google Home) and Lex (Alexa). He showed current chatbots and robots such as Sophia the Robot, as well as ELIZA, the first chatbot, from 1966. The presentation closed with an introduction to Google's Dialogflow framework and a real use case that we implemented with Dialogflow and integrated into Facebook Messenger as a “chatbot.” It is available at: https://www.facebook.com/innMensaRo

Sophia the Robot

Getting More Information out of Speech (Prof. Dr. Korbinian Riedhammer):

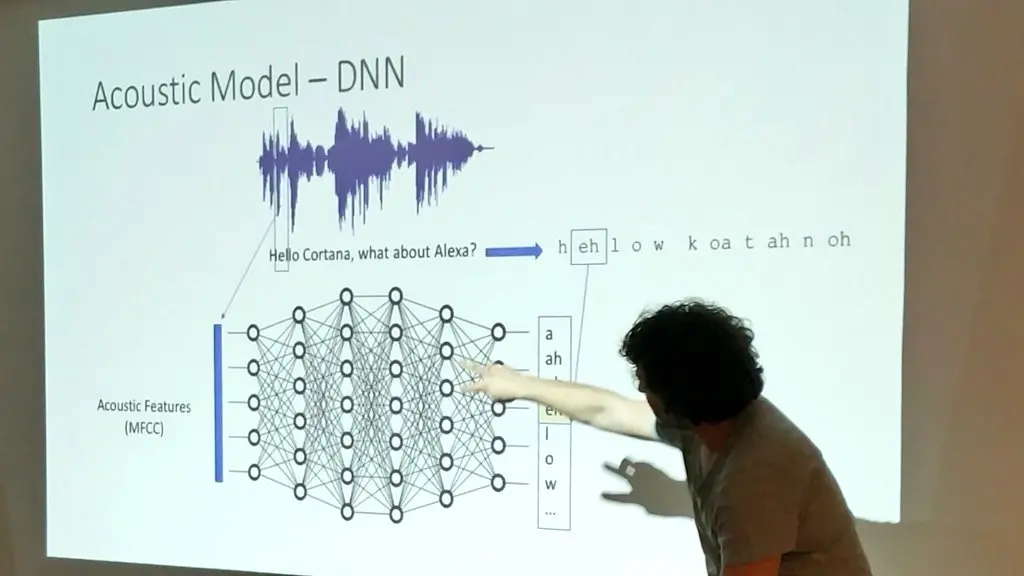

The second presentation started with many examples of how speech recognition has featured in science fiction films for decades. After the entertaining film examples and a comparison with what is feasible today, it continued with the scientific evolution of speech recognition. The guests were amazed to learn that, for example, a kind of speech recognition called Rex already existed in 1920. Today, speech recognition has advanced to an error rate of only about 5% (humans: 3-7%) and runs faster than real time. Both in the cloud and on-premises, there are now many services for almost all languages at a very affordable price.

After the guests had learned what is possible with speech, the talk became more theoretical. In this part, the technology behind modern speech recognition (deep learning) was explained in a simplified way. Remarkably, the technology is not new. It simply could not be used until a few years ago because computing power was insufficient. After the introduction to neural networks, the talk closed with a few notes on where caution is needed when using speech.

Acoustic Model Deep Neural Network
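The two figures quoted in the talk can be made concrete with a short Python sketch. It assumes that the error rate refers to the word error rate (WER), the usual metric for speech recognition, and that "faster than real time" means a real-time factor below 1. The function names and example sentences are hypothetical and were not part of the presentation.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference words processed
    # so far and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = prev[j] + 1       # reference word missing in hypothesis
            insertion = curr[j - 1] + 1  # extra word in hypothesis
            curr.append(min(substitution, deletion, insertion))
        prev = curr
    return prev[-1] / len(ref)


def real_time_factor(processing_seconds: float, audio_seconds: float) -> float:
    """Processing time divided by audio duration; below 1 is faster than real time."""
    if audio_seconds <= 0:
        raise ValueError("audio_seconds must be positive")
    return processing_seconds / audio_seconds
```

With the reference "turn on the kitchen light" and the recognized text "turn on a kitchen light", one of five words is wrong, so the WER is 20%. A recognizer that needs 3 seconds for 10 seconds of audio has a real-time factor of 0.3.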

Outlook

At innFactory, we continue to work on integrating speech and dialog systems into apps. If you need more information, please contact us by email.

Save the Date: May 2, 2018, next meetup on Virtual Reality in(n) Factory

Tobias Jonas
